Data has become the lifeblood of modern enterprises, yet finding and understanding the right data remains one of the most persistent challenges facing organizations today. Business users can’t locate what they need. Data teams spend the majority of their time answering repetitive questions instead of driving innovation. Valuable insights remain locked away in sprawling data landscapes. And despite massive investments in data catalog and governance tools, adoption rates remain stubbornly low. But AI data catalogs are flipping the script.

This isn’t a new problem, but the solution is. An AI data catalog represents a fundamental transformation in how organizations approach data management, moving beyond static metadata repositories to create intelligent systems that understand intent, learn from usage, and meet users where they work. These AI-powered catalogs don’t just document data—they actively help users discover it, understand it, and trust it.

The shift represents more than incremental improvement. Where traditional catalogs failed by adding complexity to solve complexity, AI-powered solutions ingest that complexity and output simplicity. They eliminate the need for extensive training, reduce the manual burden on governance teams, and create a virtuous cycle where every interaction makes the system smarter. For organizations drowning in data chaos, AI catalogs offer a path to scalable, self-service data practices that actually get adopted.

The adoption crisis traditional data catalogs can’t solve

Despite substantial investments, traditional data cataloging solutions face a persistent adoption problem. Organizations can spend hundreds of thousands of dollars implementing enterprise catalogs, only to find months later that few people actually use them. In fact, research shows that between 50% and 90% of data governance initiatives fail at the first attempt, with Gartner predicting that 80% of data and analytics governance initiatives will fail by 2027 without a compelling business crisis to drive adoption.

The issue isn’t a lack of features; it’s complexity masquerading as capability. Nor is it a lack of spending: the global data catalog market is growing at a 20.5% CAGR, from $871 million in 2024 to a projected $5.62 billion by 2034, yet adoption within individual organizations remains surprisingly low.
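The market figures are internally consistent. A quick check using the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, over the ten years from 2024 to 2034 recovers the cited rate from the two endpoint values (in millions of USD):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in millions of USD, as cited in the text.
rate = cagr(start_value=871, end_value=5_620, years=2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 20.5%
```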

The real cost of low adoption

When traditional catalogs fail to gain traction, organizations pay a steep price that extends far beyond the initial investment. Without an effective catalog, data teams become “human catalogs”—spending the bulk of their days answering the same questions repeatedly instead of building new capabilities.

As Tristan Mayer, Co-founder of CastorDoc (now Coalesce Catalog), notes, this pattern prevents organizations from achieving what matters most:

“When you want to build a scalable data practice where your business users can actually self-serve and feel confident and trust the data that you’re delivering to them, you’re doing the hardest part of the job—which is delivering trusted data.”

— Tristan Mayer, Co-Founder of CastorDoc (now Coalesce Catalog)

AI data catalogs break this cycle by enabling self-service discovery, freeing data teams to focus on strategic initiatives while empowering business users to find and understand data on their own.

Why traditional catalogs struggle with adoption

Traditional catalogs were built on the assumption that governance is about control and compliance. They emerged from regulatory requirements, designed to lock things down and enforce processes. This compliance-first mentality created tools that felt more like constraints than enablers. Business users avoided them, data engineers found workarounds, and the catalog became a checkbox exercise rather than a living resource.

The maturity gap is stark. Research shows that 93% of organizations at the highest data maturity level (Level 4) have established business data lineage, compared to only 26% at Level 1, with overall adoption across respondents at just 51%. This disparity reveals that while advanced organizations reap significant benefits, most struggle with foundational capabilities.

The fundamental problem with complex solutions

You can’t solve a complex problem with a complex solution. “The reason why you need governance is because your data environment has grown too quickly, too big, and you can’t control and you don’t know what you have,” shares Satish Jayanthi, CTO and Co-founder of Coalesce, in a recent podcast. “And if you add another layer of complexity, it’s not solving anything. So it defeats the entire purpose of why you’re looking for governance in the first place.”

When data environments grow rapidly with sprawling tools, diverse teams, and federated architectures, adding another layer of complexity defeats the purpose. Organizations need governance tools because their data ecosystem has become too complex to manage—introducing an equally complicated catalog only compounds the problem.

This is where AI fundamentally changes the equation. The challenge, as Tristan Mayer articulates, is clear:

“You need to ingest all that complexity, and then the output needs to be simple, easy, understandable by a bunch of different roles, tools, people. And that’s where the magic of doing it right really resonates or not.”

— Tristan Mayer, Co-Founder of CastorDoc (now Coalesce Catalog)

Why AI changes everything: From static repository to intelligent assistant

AI transforms data catalogs from passive documentation systems into active participants in the data lifecycle. Rather than simply storing metadata, AI data catalogs understand intent, resolve ambiguity, and surface the right information at the right moment.

The transformation extends beyond cataloging alone. AI is fundamentally reshaping data engineering workflows, automating pipeline generation, enabling self-healing systems, and accelerating development velocity. When embedded in data catalogs, these same AI capabilities make governance automatic, documentation continuous, and discovery intuitive—creating a unified intelligence layer across your entire data infrastructure.

Three critical use cases where AI data catalogs deliver immediate value

1. Empowering governance teams through automation

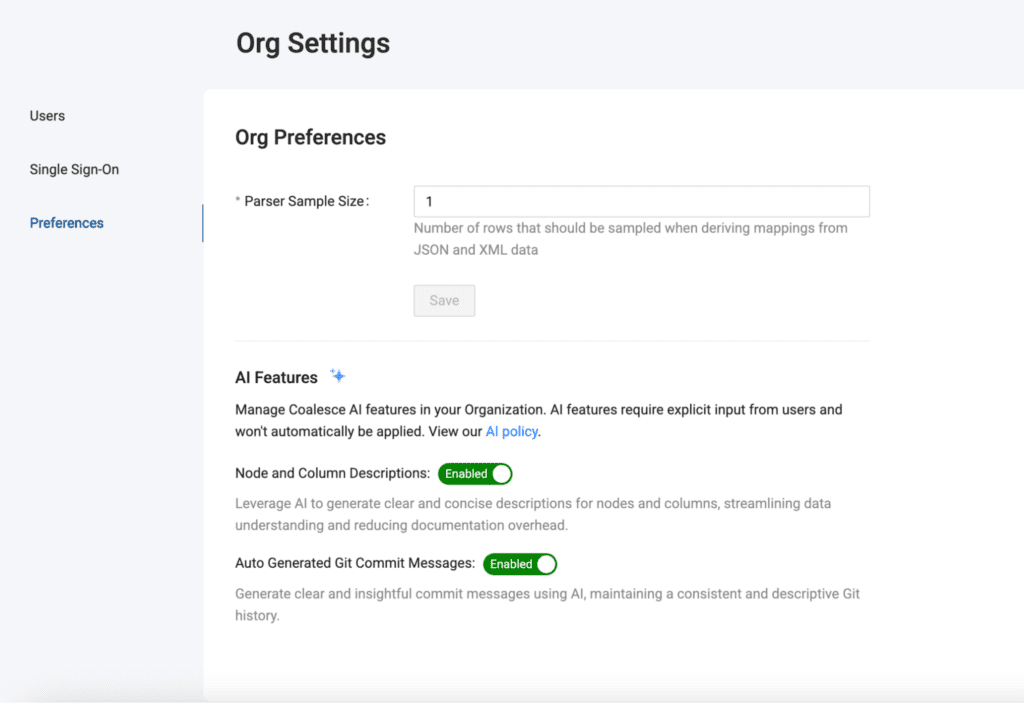

AI dramatically reduces the manual burden of governance work. Instead of data stewards painstakingly writing descriptions for thousands of tables and columns, AI can generate contextual documentation automatically by analyzing schema structures, column names, transformation logic, and usage patterns.

How AI assists governance teams:

- Auto-generate accurate descriptions from technical metadata and business context

- Identify and classify sensitive data like PII, PHI, and financial information automatically

- Create intelligent policies based on organizational patterns and usage history

- Translate complex lineage into natural language explanations that business users understand

This doesn’t replace human oversight—it amplifies it. Governance professionals can focus on defining strategy and reviewing AI-generated content rather than manually documenting every data asset. As organizations adopt modern data governance frameworks, AI-powered catalogs become the enabling technology that transforms governance from a bottleneck into an accelerator.
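To make the classification step concrete, here is a minimal rule-based sketch that flags likely sensitive columns from their names. It is an illustration under stated assumptions, not Coalesce’s implementation: the patterns and category labels are hypothetical, and an AI-assisted catalog would typically combine signals like these with sampled values and model-based classification. Keeping rules like these in version-controlled code also makes them reviewable like any other change.

```python
import re

# Hypothetical name-based rules: category -> regex matched against column names.
# Real classifiers would also inspect sample values and surrounding context.
PII_RULES = {
    "PII": re.compile(r"(e_?mail|phone|first_?name|last_?name|birth|address|ssn)", re.IGNORECASE),
    "PHI": re.compile(r"(diagnos|icd_?10|prescription|medical|patient)", re.IGNORECASE),
    "FINANCIAL": re.compile(r"(iban|card_?number|account_?number|salary)", re.IGNORECASE),
}


def classify_columns(columns: list[str]) -> dict[str, list[str]]:
    """Return the sensitive-data categories matched by each column name."""
    tags: dict[str, list[str]] = {}
    for column in columns:
        matched = [label for label, pattern in PII_RULES.items() if pattern.search(column)]
        if matched:
            tags[column] = matched
    return tags


print(classify_columns(["customer_email", "order_total", "patient_id", "iban", "created_at"]))
# {'customer_email': ['PII'], 'patient_id': ['PHI'], 'iban': ['FINANCIAL']}
```

Flagged columns would then go to a data steward for review rather than being tagged blindly, which matches the “amplify, don’t replace” role described above.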

Real-world example: Coalesce AI Documentation Assistant

Coalesce AI Documentation Assistant allows data teams to spend less time documenting and more time innovating.

2. Enabling natural language data discovery

The most transformative AI capability is natural language search. Users no longer need to understand database schemas, navigate metadata trees, or write exploratory SQL. They ask questions in plain English and receive relevant, ranked results.

When a business analyst asks, “What tables contain customer loyalty information?” the AI data catalog doesn’t just perform keyword matching. It understands context, interprets synonyms, considers usage popularity, and ranks results based on data quality. It can even correct spelling errors and handle ambiguous queries intelligently.

Key benefits of natural language search:

- Zero learning curve for non-technical users

- Contextual understanding that goes beyond simple keyword matching

- Intelligent ranking based on data quality, usage, and relevance

- Accelerated time to insight for technical and business users alike

As Coalesce Field CTO Doug Barrett explains: “With Coalesce Catalog, it’s find, it’s understanding, and it’s trust. You’ve got AI assistance there where you can write natural language and find what you’re looking for. That’s really powerful.”

This represents a fundamental shift: data discovery that begins with a question, not a training manual.
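The search behavior described in this section can be illustrated with a small sketch. It is not how any particular catalog implements search: the glossary, asset fields, and score weights are hypothetical, and production systems would typically use embeddings or language models rather than string matching. It shows three ideas from the text: correcting misspellings (here with Python’s standard `difflib.get_close_matches`), expanding synonyms, and blending text relevance with usage and quality signals.

```python
import re
from dataclasses import dataclass
from difflib import get_close_matches

# Hypothetical glossary: business term -> synonyms users might type instead.
SYNONYMS = {
    "loyalty": ["rewards", "points", "membership"],
    "customer": ["client", "account"],
}


@dataclass
class Asset:
    name: str
    description: str
    usage: float    # hypothetical 0-1 popularity signal, e.g. share of recent queries
    quality: float  # hypothetical 0-1 quality signal, e.g. share of passing checks


def expand_terms(query: str) -> set[str]:
    """Correct likely misspellings against the glossary, then add synonyms."""
    vocabulary = list(SYNONYMS) + [s for group in SYNONYMS.values() for s in group]
    terms: set[str] = set()
    for word in re.findall(r"[a-z]+", query.lower()):
        close = get_close_matches(word, vocabulary, n=1, cutoff=0.8)
        corrected = close[0] if close else word
        terms.add(corrected)
        terms.update(SYNONYMS.get(corrected, []))
    return terms


def search(query: str, assets: list[Asset]) -> list[str]:
    """Rank assets by term overlap, usage, and quality (weights are illustrative)."""
    terms = expand_terms(query)
    if not terms:
        return []
    scored = []
    for asset in assets:
        text = f"{asset.name} {asset.description}".lower()
        relevance = sum(term in text for term in terms) / len(terms)
        if relevance == 0:
            continue  # no lexical match at all: leave it out of the results
        score = 0.6 * relevance + 0.2 * asset.usage + 0.2 * asset.quality
        scored.append((score, asset.name))
    return [name for _, name in sorted(scored, reverse=True)]


assets = [
    Asset("dim_customer_loyalty", "Customer loyalty tier and rewards points", usage=0.9, quality=0.95),
    Asset("stg_loyalty_events", "Raw loyalty program events", usage=0.2, quality=0.5),
    Asset("fct_orders", "Order line items", usage=0.8, quality=0.9),
]
print(search("custmer loyalty", assets))  # misspelled "customer" still matches
```

With these sample assets the misspelled query returns `['dim_customer_loyalty', 'stg_loyalty_events']`: the curated, widely used table ranks first, and the unrelated orders table is excluded.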

Real-world example: Coalesce Catalog Natural Language Search

With Coalesce Catalog’s natural language search (NLS) capabilities, users ask for data in plain English right in Slack, Teams, or alongside their BI tools. Coalesce’s AI data catalog surfaces certified answers and shared definitions so teams align fast.

Discover data in business context: understand how data is defined and used before you query it.

3. Accelerating analytics with SQL copilots

For technical users, AI-powered SQL copilots represent a breakthrough in productivity. Analysts spend less time writing boilerplate queries and more time analyzing results. The AI suggests query structures, auto-completes table and column names, and helps optimize complex joins.

What SQL copilots deliver:

- Intelligent query suggestions based on context and usage patterns

- Automatic joins between related tables using lineage information

- Performance optimization recommendations for complex queries

- Error detection and correction before queries run

The result is faster time to insight for analysts and reduced load on data engineering teams answering basic SQL questions.
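One way a copilot could propose joins from lineage or relationship metadata is to treat tables as nodes in a graph and search for the shortest path between the tables a user mentions. The sketch below runs a breadth-first search over a hypothetical, hand-written list of key relationships; it illustrates the idea only and is not how Coalesce Copilot works internally.

```python
from collections import deque
from typing import Optional

# Hypothetical relationship metadata, e.g. derived from lineage or declared keys:
# (table_a, table_b, join_condition)
RELATIONSHIPS = [
    ("orders", "customers", "orders.customer_id = customers.id"),
    ("order_items", "orders", "order_items.order_id = orders.id"),
    ("order_items", "products", "order_items.product_id = products.id"),
]


def build_graph(relationships):
    """Undirected adjacency list: table -> list of (neighbor, join_condition)."""
    graph = {}
    for left, right, condition in relationships:
        graph.setdefault(left, []).append((right, condition))
        graph.setdefault(right, []).append((left, condition))
    return graph


def join_path(graph, start: str, goal: str) -> Optional[list[tuple[str, str]]]:
    """Breadth-first search: fewest joins from start to goal, as (table, condition) hops."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        table, hops = queue.popleft()
        if table == goal:
            return hops
        for neighbor, condition in graph.get(table, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, hops + [(neighbor, condition)]))
    return None


def suggest_from_clause(graph, start: str, goal: str) -> Optional[str]:
    """Render the join path as a FROM/JOIN clause a copilot could propose."""
    hops = join_path(graph, start, goal)
    if hops is None:
        return None
    lines = [f"FROM {start}"] + [f"JOIN {table} ON {condition}" for table, condition in hops]
    return "\n".join(lines)


graph = build_graph(RELATIONSHIPS)
print(suggest_from_clause(graph, "customers", "products"))
```

Breadth-first search is used because it finds the path with the fewest joins; a real copilot would also weigh join cardinality and which paths analysts actually use.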

Real-world example: Coalesce Copilot

Coalesce Copilot helps data engineers streamline development, governance, and collaboration. It’s like a senior teammate who knows your stack inside and out.

Meeting users where they work: The key to AI data catalog adoption

One of the most important lessons from successful catalog implementations is that adoption requires meeting users in their existing workflows. As Satish Jayanthi emphasizes:

“Meeting the user where they are: that’s another big thing I’ve seen with this. If you spend all your time in Slack, you don’t really want to go somewhere else to ask a question or discover something.”

— Satish Jayanthi, CTO and Co-Founder of Coalesce

If data discovery requires context switching to a separate application, adoption will remain low regardless of features. AI-powered catalogs integrate directly into the tools where work happens:

Slack and Microsoft Teams Integration: Users ask data questions directly in chat channels where their teams collaborate. “Where can I find customer retention metrics?” typed into Slack receives an instant, contextually relevant response with links to datasets, dashboards, and documentation.

BI Tool Integration: When analysts work in Tableau, Power BI, or Looker, catalog capabilities surface directly in the tool interface. Hovering over a field shows its definition, lineage, and quality score without leaving the analytics environment.

IDE Integration: For data engineers working in development environments, catalog capabilities appear inline with code. Column suggestions include business definitions. Query validation checks against certified data sources. Documentation updates automatically as transformations change.

Customer success story: 6x adoption at Doctolib

Doctolib, Europe’s leading healthcare technology company, transformed their data culture by replacing a struggling traditional catalog with Coalesce Catalog, and the results speak for themselves.

The Challenge: With their previous catalog, Doctolib’s Data Governance team struggled to reach more than 100 monthly active users. Manual processes for PII and PHI classification made the catalog feel like an enforcement tool rather than an enabler, stifling adoption across their 60+ feature teams.

The Result: At rollout, Coalesce Catalog immediately hit 600 active users (a 6x increase) and grew to over 900 users across product managers, engineering managers, security teams, and data professionals.

The Key Difference: “People are finally contributing: commenting, documenting, and keeping the catalog alive, instead of our team carrying it alone,” says Diana Carrondo, Data Governance Lead at Doctolib. By shifting classification rules from the catalog interface into code with version control, the catalog became a “store window” for data discovery rather than a compliance checkpoint.

The virtuous cycle of usage and intelligence

Tristan Mayer describes how modern catalogs create a positive feedback loop: “We’re making sure that our catalog doesn’t just provide data, but that it also learns from usage to build a smarter, more adaptive governance ecosystem. Questions downstream generate context that improves documentation, reveals issues, and trains the model. It becomes a virtuous cycle: the more people ask, the better the system gets.”

This represents a fundamental shift from traditional static catalogs to learning systems that become more valuable with every interaction.

Governance that doesn’t require training

Satish Jayanthi emphasizes a critical principle: “If I have to say one word, it’s the simplicity. It is so simple to start, and that’s how you get the adoption. Because governance and regulation, it’s too complicated. A lot of times, it’s overblown, and you start with a big bang approach—and then you don’t get anywhere with the data strategy.”

If your catalog requires extensive training for anyone to get value out of it, chances are it won’t be widely adopted. The philosophy shared by leading AI-powered catalog providers centers on intuitive design, as Coalesce CEO Armon Petrossian explains: “One of the core philosophies is to keep it simple, have the product be intuitive, have it be approachable, and allow it to serve a much broader audience.”

Capturing tribal knowledge before it walks out the door

Coalesce Data Engineer Josh Hall highlights a critical benefit often overlooked: “I think there’s a really important factor here of not having to worry about someone on the team walking away with tribal knowledge. That all gets captured within the catalog. Your team always has an understanding of how we’re defining metrics, how columns are defined, which I think is really important when it comes to scalability of that pipeline over time, of your data practice over time.”

When AI-powered catalogs automatically document transformations, capture business context, and preserve decision rationale, organizations build institutional knowledge that survives team changes and accelerates onboarding for new members.

Understanding agentic AI: Your personal data chef

Perhaps the best way to understand agentic AI in data cataloging is through an analogy: think of the role of agentic AI in data governance and discovery as having your own personal chef. You don’t need to know what’s in the pantry or how to prep ingredients. You say, “I want spaghetti,” and the chef handles everything, from shopping to cooking to serving.

Agentic AI does the same for data—it doesn’t just search metadata, it understands intent.

What agentic AI can do:

- Infer the best table or metric based on usage patterns: When you ask about “revenue,” the AI knows which of the 15 revenue tables is most appropriate for your role and use case

- Resolve ambiguity in natural language queries: “Show me customer data from last quarter” becomes a specific query against the right certified table with proper date filtering

- Automatically pull in relevant documentation, lineage, and ownership context: Every result includes not just the data location but the full context needed to use it properly

- Learn from previous interactions to improve over time: The system remembers that when your finance team asks about “bookings,” they mean ARR, not room reservations

This level of intelligence represents a fundamental leap beyond traditional search. It’s proactive assistance, not passive retrieval.
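The last two capabilities in the list (resolving ambiguous terms and learning from previous interactions) can be sketched as a per-team feedback counter: each time a user confirms which asset answered a term, that choice is recorded, and later lookups from the same team prefer it. The team names, asset names, and simple “most confirmed wins” rule are hypothetical; real agentic systems would draw on far richer signals.

```python
from collections import Counter, defaultdict
from typing import Optional


class TermResolver:
    """Remembers which asset each team means by an ambiguous business term."""

    def __init__(self) -> None:
        # (team, term) -> Counter of asset names that users on that team confirmed
        self._feedback = defaultdict(Counter)

    def record(self, team: str, term: str, asset: str) -> None:
        """Log that a user on `team` accepted `asset` as the answer for `term`."""
        self._feedback[(team, term.lower())][asset] += 1

    def resolve(self, team: str, term: str) -> Optional[str]:
        """Return the team's most frequently confirmed asset for `term`, if any."""
        counts = self._feedback.get((team, term.lower()))
        if not counts:
            return None
        return counts.most_common(1)[0][0]


resolver = TermResolver()
resolver.record("finance", "bookings", "finance.arr_bookings")
resolver.record("finance", "Bookings", "finance.arr_bookings")
resolver.record("finance", "bookings", "ops.room_reservations")
resolver.record("travel_ops", "bookings", "ops.room_reservations")
print(resolver.resolve("finance", "bookings"))     # finance.arr_bookings
print(resolver.resolve("travel_ops", "bookings"))  # ops.room_reservations
print(resolver.resolve("marketing", "bookings"))   # None
```

Every confirmed answer updates the counts, which is a very small-scale version of the “the more people ask, the better the system gets” loop described earlier.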

AI data catalog implementation & best practices

Based on working with hundreds of organizations implementing AI-powered catalogs, several patterns consistently predict success:

Start with a high-value pilot domain

Rather than attempting enterprise-wide deployment immediately, begin with a specific business domain where data challenges are acute and stakeholders are engaged. Marketing analytics, customer success metrics, or supply chain data often make excellent pilots because they have clear business impact and enthusiastic users.

What makes a good pilot:

- Clear business pain point that catalog can address

- Executive sponsor who champions adoption

- Manageable scope (50-200 data assets initially)

- Mix of technical and business users to validate value

- Success metrics defined upfront

Prioritize quick wins over perfect documentation

The enemy of catalog adoption is perfectionism. Organizations that attempt to fully document every asset before launch never launch. Instead, prioritize breadth over depth initially.

- Phase 1 (Weeks 1-2): Connect sources and enable basic search across all assets

- Phase 2 (Weeks 3-4): Add AI-generated descriptions and enable natural language queries

- Phase 3 (Weeks 5-8): Layer in lineage, quality scores, and usage analytics

- Phase 4 (Month 3+): Refine governance policies and expand to additional domains

This phased approach delivers value quickly while continuously improving coverage and quality.

Embed catalog champions in each department

Technology alone doesn’t drive adoption—people do. Identify data-savvy individuals in each business unit who can evangelize the catalog, answer questions, and provide feedback to the central team.

Champion responsibilities:

- Demonstrate catalog capabilities in team meetings

- Document common use cases from their domain

- Collect and communicate user feedback

- Validate that AI-generated content makes sense for their business context

Measure what matters: Adoption and impact metrics

Track metrics that indicate real adoption and business value:

- Active users: Percentage of potential users who access the catalog monthly

- Query success rate: Percentage of searches that lead to useful results

- Time to discovery: How long it takes users to find relevant data

- Support ticket reduction: Decrease in “where is the data” questions

- Documentation coverage: Percentage of assets with meaningful metadata

Leading organizations review these metrics monthly and adjust their approach based on what drives improvement.
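As a rough illustration, the five metrics can be computed from simple monthly counts. The field names, the definition of a “successful” search, and the sample numbers below are hypothetical; in practice the inputs would come from the catalog’s usage logs, search telemetry, and ticketing system, and each organization should define “useful result” and “meaningful metadata” for itself.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class MonthlyUsage:
    potential_users: int            # people expected to use the catalog
    active_users: int               # distinct users active this month
    searches: int
    successful_searches: int        # hypothetical definition: search followed by opening an asset
    discovery_minutes: list[float]  # per search session: time until a useful result
    where_is_data_tickets: int      # "where is the data" requests this month
    documented_assets: int          # assets meeting your bar for meaningful metadata
    total_assets: int


def adoption_metrics(month: MonthlyUsage, baseline_tickets: int) -> dict[str, float]:
    """Compute the five adoption metrics; baseline_tickets is the pre-launch monthly count."""
    return {
        "active_users_pct": 100 * month.active_users / month.potential_users,
        "query_success_pct": 100 * month.successful_searches / month.searches,
        "median_time_to_discovery_min": median(month.discovery_minutes),
        "ticket_reduction_pct": 100 * (baseline_tickets - month.where_is_data_tickets) / baseline_tickets,
        "documentation_coverage_pct": 100 * month.documented_assets / month.total_assets,
    }


# Hypothetical numbers for one month.
march = MonthlyUsage(
    potential_users=1_000, active_users=600, searches=2_000, successful_searches=1_400,
    discovery_minutes=[2, 5, 3, 10, 4], where_is_data_tickets=30,
    documented_assets=480, total_assets=600,
)
for name, value in adoption_metrics(march, baseline_tickets=120).items():
    print(f"{name}: {value:.1f}")
```

The median is used for time to discovery so that a few very long searches don’t dominate the number.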

The ROI of AI-powered catalogs

When evaluating catalog investments, consider both tangible and intangible returns:

Reduced time to insight:

Data teams spend approximately 30% of their time trying to find and understand which data they can trust, according to industry research. AI data catalogs dramatically reduce this discovery time through natural language search and automated documentation. Consider a team of 10 analysts, each earning $100K: at 30% of their time, searching for data costs roughly $300,000 a year in salary, so even a 20-30% reduction in search time represents $60,000-$90,000 in recaptured productivity annually.
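The productivity estimate multiplies four inputs from the paragraph above: team size, salary, the share of time spent searching, and the reduction in that time. The helper below simply makes the arithmetic explicit so teams can plug in their own numbers; the function name and parameters are illustrative, and salary is used as a simple proxy for the cost of time.

```python
def recaptured_productivity(analysts: int, salary: float, search_share: float, reduction: float) -> float:
    """Annual salary value recovered by reducing time spent searching for data.

    search_share: fraction of working time spent finding and understanding data (0.30 above)
    reduction: fractional cut in that search time achieved with the catalog
    """
    return analysts * salary * search_share * reduction


for reduction in (0.20, 0.30):
    savings = recaptured_productivity(analysts=10, salary=100_000, search_share=0.30, reduction=reduction)
    print(f"{reduction:.0%} less search time -> ${savings:,.0f} per year")
# 20% less search time -> $60,000 per year
# 30% less search time -> $90,000 per year
```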

Decreased data team burden:

Traditional approaches force data teams to spend the majority of their time answering repetitive questions instead of building strategic capabilities. AI catalogs enable self-service discovery, allowing data teams to redirect effort toward innovation and high-value projects rather than serving as “human catalogs.”

Faster onboarding:

Comprehensive, searchable documentation in AI-powered catalogs accelerates new team member productivity. Industry research shows AI data catalogs can reduce onboarding time from 4 weeks to 2 days, transforming a month-long process into a streamlined experience. For organizations hiring rapidly, this dramatically accelerates time to value.

Reduced compliance risk:

Automated sensitive data classification and access controls reduce compliance violations. According to IBM’s 2023 Cost of a Data Breach Report, the average cost of a data breach reached $4.45 million—making proactive data governance through AI catalogs a critical investment in risk mitigation.

Strategic advantages

Data democratization:

Self-service data access empowers business users to answer their own questions, reducing dependence on technical teams and accelerating decision-making cycles.

Institutional knowledge preservation:

Capturing tribal knowledge in the catalog protects organizations from the risk of key personnel departures and makes expertise accessible across the organization.

Data quality improvement:

Increased visibility into data quality issues drives faster remediation. Organizations typically see a 30-40% reduction in data quality incidents within the first year.

Scalable governance:

As data volumes and complexity grow, AI-powered catalogs scale governance capabilities without proportional increases in headcount.

Common implementation challenges and how to overcome them

Challenge: Resistance from technical teams

Symptom: Data engineers view the catalog as extra work rather than a productivity tool.

Solution: Demonstrate how automation reduces manual documentation burden. Show how lineage tracking speeds impact analysis. Integrate catalog updates into existing workflows (CI/CD, transformation pipelines) so documentation happens automatically.
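As one example of putting documentation into CI/CD, a pipeline step could fail when models or columns lack descriptions. The sketch below assumes a dbt-style `manifest.json` artifact, in which `nodes` maps unique IDs to objects carrying `resource_type`, `description`, and a `columns` dictionary; verify the field names against your dbt version before relying on it. The inline `sample` manifest is hypothetical data.

```python
import json
from pathlib import Path


def undocumented(manifest: dict) -> list[str]:
    """List models and columns in a dbt-style manifest that have empty descriptions."""
    missing = []
    for unique_id, node in manifest.get("nodes", {}).items():
        if node.get("resource_type") != "model":
            continue  # skip tests, seeds, snapshots, etc.
        if not node.get("description", "").strip():
            missing.append(unique_id)
        for column_name, column in node.get("columns", {}).items():
            if not column.get("description", "").strip():
                missing.append(f"{unique_id}.{column_name}")
    return missing


def check_manifest(path: str) -> int:
    """CI entry point: print documentation gaps and return a non-zero exit code if any exist."""
    manifest = json.loads(Path(path).read_text())
    problems = undocumented(manifest)
    for item in problems:
        print(f"missing description: {item}")
    return 1 if problems else 0


# Hypothetical sample shaped like a fragment of target/manifest.json.
sample = {
    "nodes": {
        "model.shop.dim_customers": {
            "resource_type": "model",
            "description": "One row per customer.",
            "columns": {
                "customer_id": {"name": "customer_id", "description": "Primary key."},
                "segment": {"name": "segment", "description": ""},
            },
        },
        "test.shop.not_null_dim_customers_customer_id": {"resource_type": "test", "description": ""},
    }
}
print(undocumented(sample))  # ['model.shop.dim_customers.segment']
```

In a CI job, a step such as `raise SystemExit(check_manifest("target/manifest.json"))`, run after a dbt command that writes the manifest (for example `dbt compile`), would fail the build until the gaps are filled.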

Challenge: Stale metadata

Symptom: Documentation becomes outdated as schemas and logic change.

Solution: Implement automated metadata refresh schedules. Configure change detection alerts. Integrate with transformation tools like dbt or Coalesce so documentation updates propagate automatically. Enable users to flag outdated content directly from the catalog interface.
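Change detection can start as a simple diff between two schema snapshots. The sketch below compares hypothetical `{column: type}` mappings captured on consecutive metadata refreshes and reports added, removed, and retyped columns, the kind of event that could trigger a documentation review or a downstream notification. How snapshots are captured (information_schema queries, warehouse APIs, or the catalog’s own connectors) is left as an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaDrift:
    added: dict[str, str] = field(default_factory=dict)                # column -> new type
    removed: dict[str, str] = field(default_factory=dict)              # column -> old type
    retyped: dict[str, tuple[str, str]] = field(default_factory=dict)  # column -> (old, new)

    def is_empty(self) -> bool:
        return not (self.added or self.removed or self.retyped)


def diff_schema(previous: dict[str, str], current: dict[str, str]) -> SchemaDrift:
    """Compare two {column: type} snapshots of the same table."""
    drift = SchemaDrift()
    for column, dtype in current.items():
        if column not in previous:
            drift.added[column] = dtype
        elif previous[column] != dtype:
            drift.retyped[column] = (previous[column], dtype)
    for column, dtype in previous.items():
        if column not in current:
            drift.removed[column] = dtype
    return drift


# Hypothetical snapshots of a customers table from two consecutive refreshes.
yesterday = {"customer_id": "NUMBER", "email": "VARCHAR", "signup_date": "DATE"}
today = {"customer_id": "VARCHAR", "email": "VARCHAR", "signup_ts": "TIMESTAMP"}
drift = diff_schema(yesterday, today)
if not drift.is_empty():
    print(f"added={drift.added} removed={drift.removed} retyped={drift.retyped}")
```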

Challenge: Low initial adoption

Symptom: Users default to asking colleagues instead of searching the catalog.

Solution: Make catalog search the path of least resistance. Integrate into Slack/Teams where questions naturally occur. Create compelling launch communication highlighting specific use cases. Celebrate early adopters and share success stories. Make catalog usage part of onboarding for all new hires.

Challenge: Unclear ownership and accountability

Symptom: No one takes responsibility for catalog content quality.

Solution: Establish a clear data ownership model with named stewards for each domain. Make ownership visible in the catalog itself. Create escalation paths for metadata disputes. Include catalog content quality in performance reviews for data team members.

Challenge: Integration complexity

Symptom: Connecting to diverse data sources requires extensive custom development.

Solution: Prioritize catalog platforms with pre-built connectors to your core infrastructure. Start with fewer, well-integrated sources rather than superficial connections to everything. Invest in quality metadata from key systems before adding peripheral ones.

Looking ahead: The future of AI-powered data catalogs

The evolution of AI-powered catalogs continues to accelerate. Several emerging capabilities will further transform how organizations manage and activate their data:

Predictive data discovery:

Future catalogs won’t wait for users to search—they’ll proactively surface relevant data based on current projects, role, and typical usage patterns. When you open a new analytics project, the catalog suggests the exact tables and metrics you’ll likely need.

Automated data quality management:

AI will move beyond detecting data quality issues to automatically remediating them. When freshness degradation is detected, the catalog will trigger refresh processes. When schema drift occurs, documentation updates automatically and downstream systems receive notifications.
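The detection half of that loop is already common today in the form of freshness checks. A minimal sketch, assuming each table exposes a last-loaded timestamp and has an agreed maximum age; the table names, thresholds, and default age are hypothetical, and the function only returns the tables that would need a refresh rather than triggering any job.

```python
from datetime import datetime, timedelta, timezone


def stale_tables(
    last_loaded: dict[str, datetime],
    max_age: dict[str, timedelta],
    now: datetime,
    default_max_age: timedelta = timedelta(hours=24),
) -> list[str]:
    """Return tables older than their allowed age, stalest first.

    A fuller system might hand this list to an orchestrator to trigger refreshes;
    this sketch only detects the problem.
    """
    overdue = []
    for table, loaded_at in last_loaded.items():
        age = now - loaded_at
        if age > max_age.get(table, default_max_age):
            overdue.append((age, table))
    return [table for _, table in sorted(overdue, reverse=True)]


now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "orders": now - timedelta(hours=1),
    "customers": now - timedelta(hours=30),
    "web_events": now - timedelta(hours=6),
}
max_age = {"orders": timedelta(hours=2), "web_events": timedelta(hours=1)}
print(stale_tables(last_loaded, max_age, now))  # ['customers', 'web_events']
```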

Conversational data experiences:

Natural language interfaces will evolve into full conversational experiences where users can ask follow-up questions, request clarifications, and even ask the AI to generate analyses or visualizations based on discovered data.

Cross-organization data collaboration:

As data mesh architectures proliferate, catalogs will facilitate discovery and governance across organizational boundaries, enabling secure data sharing and collaboration while maintaining appropriate controls.

Conclusion: The path forward

The fundamental shift from traditional to AI data catalogs isn’t about incremental improvement—it’s about reimagining what data governance and discovery can be. By embedding intelligence throughout the data lifecycle, organizations transform catalogs from compliance checkboxes into strategic assets that accelerate decision-making, reduce risk, and empower users across the organization.

The question is no longer whether to adopt AI-powered cataloging, but how quickly you can get started. Organizations that move now gain compounding advantages: better data literacy, faster insights, reduced governance burden, and institutional knowledge that survives team changes.

Success requires commitment to simplicity, focus on user adoption, and willingness to learn from usage patterns. But for organizations willing to make that investment, the returns are substantial and sustainable.

Take action: Start your AI-powered catalog journey

Ready to experience the future of data cataloging? Discover how AI-powered catalog capabilities can transform your data discovery, governance, and adoption. Book a demo to see these capabilities in action, or explore our latest AI data catalog resources to learn more about building a trusted knowledge bank for your organization.

Further reading and resources

To deepen your understanding of AI-powered data catalogs and related concepts:

- What Is a Data Catalog? Tools, Examples, and Benefits – Comprehensive guide to data catalog fundamentals, core capabilities, and implementation best practices

- Top 10 Data Catalog Tools in 2025 – Detailed comparison of leading catalog solutions, including AI-powered, cloud-native, and open-source data catalog options

- AI in Data Engineering: The Complete Guide – How AI is reshaping data engineering workflows, from pipeline generation to automated governance

- Data Governance: Ensuring Data Integrity and Compliance – Framework for implementing effective governance that enables rather than constrains

- Metadata Management: Building an AI-Ready Data Foundation – Complete guide to metadata management strategies, from passive documentation to active operational intelligence