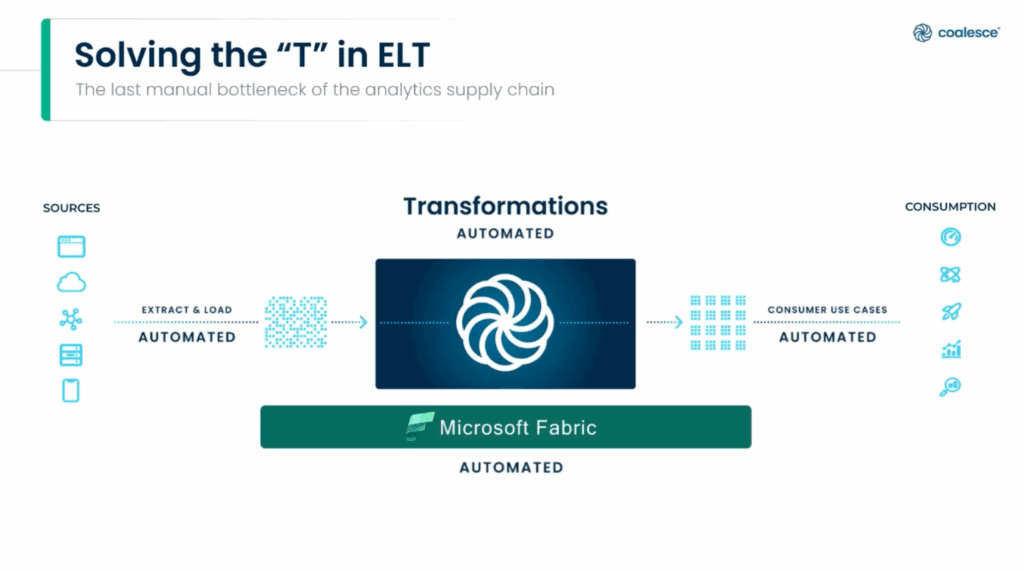

Microsoft Fabric has emerged as a unified analytics platform that promises to streamline the entire data lifecycle—from ingestion to insights. For data engineers and architects working within the Microsoft ecosystem, Fabric represents a significant shift toward consolidation and simplification. However, as organizations adopt this platform, one critical challenge continues to demand attention: data transformation.

Key Insight: The “T” in ELT remains the most complex, time-consuming, and maintenance-heavy part of the data pipeline. While Fabric provides powerful tools for extracting and loading data, as well as excellent visualization capabilities through Power BI, the transformation layer often becomes a bottleneck that slows teams down and introduces technical debt.

This comprehensive guide explores the landscape of data transformation in Microsoft Fabric, examining the available options, common challenges, and best practices for data engineers. More importantly, it reveals how modern transformation platforms like Coalesce can help teams build faster, scale more efficiently, and maintain data quality without the typical overhead.

Understanding Microsoft Fabric’s data transformation ecosystem

Microsoft Fabric consolidates multiple data services into a unified SaaS platform, built on a foundation called OneLake. This architecture enables organizations to centralize their data storage while leveraging different compute engines for specific workloads. For a comprehensive overview of all Fabric capabilities, see Microsoft Fabric’s official documentation.

For data transformation specifically, Fabric offers several native approaches:

| Transformation Approach | Best For | Key Limitations |

|---|---|---|

| Data Factory Pipelines | Orchestration & data movement | Manual configuration, difficult to standardize |

| Dataflow Gen2 | Low-code visual transformations | Performance issues at scale, limited version control |

| Spark Notebooks | Complex programmatic transformations | High maintenance, code duplication |

| SQL-Based (T-SQL) | Structured data transformations | Difficult dependency management |

When evaluating which transformation approach to use, Microsoft’s decision guide provides detailed scenarios and recommendations.

Data Factory pipelines

Data Factory in Fabric serves as the primary orchestration tool, connecting to over 170 data sources and enabling both ETL and ELT workflows. Pipelines handle data movement and can invoke various transformation activities, including:

- Notebooks for Spark-based processing

- Stored procedures for database transformations

- SQL scripts for data manipulation

- Custom activities for specialized logic

Strengths: Excellent orchestration capabilities, wide connector support

Challenges: Requires significant manual effort for complex transformation logic, difficult to standardize patterns across teams

Dataflow Gen2

Dataflow Gen2 provides a low-code interface with over 300 transformations. Key features include:

- Visual drag-and-drop interface

- Query folding capabilities (pushes transformations to source systems)

- Integration with Power Query

- Support for data cleansing and shaping

Strengths: Accessible to analysts and citizen developers

Challenges: Performance becomes unpredictable with large datasets, version control remains a challenge, limited support for complex business logic

For teams using Dataflow Gen2 extensively, Microsoft’s performance optimization guide provides actionable techniques.

Spark notebooks

Fabric Data Engineering leverages Apache Spark for processing large-scale datasets. Data engineers can write PySpark or Scala code in notebooks to implement sophisticated transformation logic with full programmatic control.

Strengths: Maximum flexibility, handles large-scale data processing

Challenges: Each transformation requires custom code, creating maintenance burdens and potential inconsistencies, teams struggle with code duplication and poor visibility

SQL-based transformations

Fabric Warehouse supports T-SQL for data transformations, allowing engineers to implement business logic through stored procedures, views, and SQL scripts.

Strengths: Familiar to database administrators and SQL-proficient engineers

Challenges: Managing hundreds of stored procedures, ensuring proper execution order, and tracking dependencies becomes increasingly difficult

The core challenges of data transformation in Microsoft Fabric

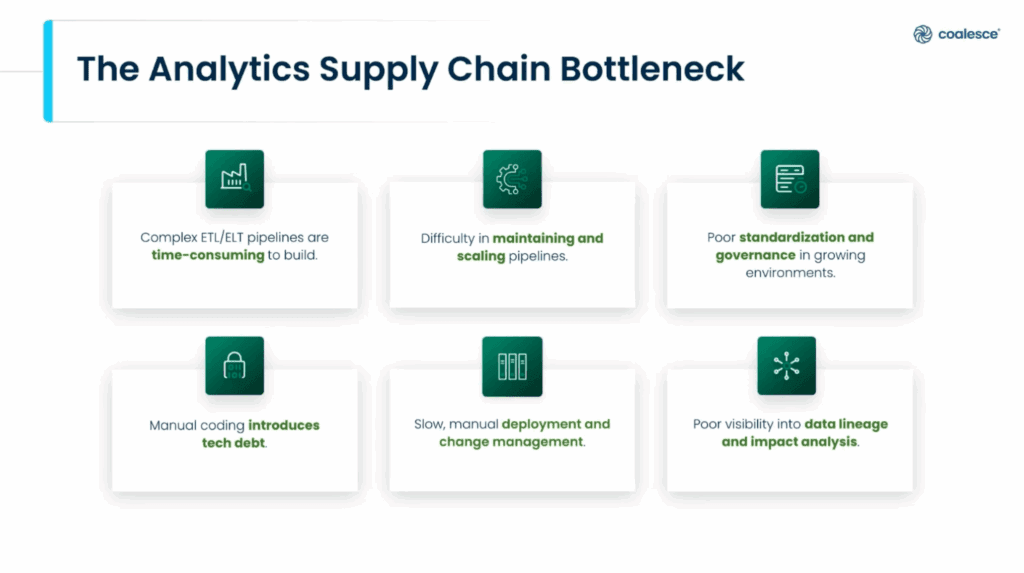

Despite Fabric’s comprehensive toolset, data engineering teams consistently encounter several pain points when building transformation pipelines:

Development velocity

Building complex transformation pipelines in Fabric requires significant time investment. Whether writing PySpark notebooks, configuring Data Factory activities, or creating Dataflow Gen2 sequences, each transformation demands hands-on coding or configuration work.

Impact: For a typical enterprise data warehouse with hundreds of tables and thousands of transformation steps, development cycles can stretch into months. This slow pace prevents teams from responding quickly to changing business requirements or new data sources.

Technical debt accumulation

Manual coding inevitably introduces technical debt. Consider these common scenarios:

- Different engineers write code in different styles

- Junior team members implement logic differently than senior engineers

- Inconsistent naming conventions across projects

- Duplicated code across multiple notebooks or procedures

- Undocumented business rules buried in code

Real-World Example: One organization implementing Fabric reported that their transformation logic was scattered across multiple notebooks, with significant code duplication across different workspaces. This fragmentation made it nearly impossible to implement changes efficiently or maintain consistent data quality standards.

Limited visibility and impact analysis

Understanding how data flows through Fabric transformations remains challenging. Teams struggle to answer fundamental questions like:

- “If I change this source column, what downstream transformations will break?”

- “Which reports depend on this table?”

- “What’s the full lineage from source to final report?”

- “How does data quality impact downstream analytics?”

Consequence: This lack of visibility creates anxiety around changes. Teams implement elaborate manual review processes to validate modifications, slowing deployment cycles to weeks or even months.

Standardization and governance gaps

As Fabric environments grow, maintaining standards becomes increasingly difficult. Without enforced templates or patterns:

- Each team develops their own approach to transformation logic

- Naming conventions vary across projects

- Documentation practices are inconsistent

- Security and compliance requirements are hard to enforce

- Code quality varies widely between engineers

Scalability constraints

Building individual transformations works at small scale, but managing hundreds or thousands of transformation objects requires a different approach:

- Manual effort to modify patterns becomes prohibitive

- Applying consistent changes across transformations is time-consuming

- Migrating transformation logic between environments is complex

- Moving between platforms (Fabric to Snowflake) requires extensive rewriting

Brittle deployment processes

Deploying transformation changes in Fabric often involves:

- Manual steps and extensive testing

- Risk of breaking production pipelines

- Infrequent releases batching many changes together

- Rollback procedures that are complex or non-existent

- Limited automated validation

Result: Teams spend more time managing deployments than building new capabilities.

Best practices for data transformation in Microsoft Fabric

The data engineering community has documented extensive best practices for Fabric data warehouses that teams should consider. Despite these challenges, teams can adopt several practices to improve their Microsoft Fabric data transformation workflows:

Embrace the medallion architecture

Organize your data into three distinct layers:

| Layer | Purpose | Transformation Level |

|---|---|---|

| Bronze | Raw data landing | Minimal – load as-is from sources |

| Silver | Cleaned & validated data | Data quality checks, standardization, cleansing |

| Gold | Business-ready datasets | Business logic, aggregations, optimized for analytics |

Benefits of this approach:

– Clear separation of concerns

– Easier to reason about transformation logic

– Enables incremental quality improvements

– Supports data governance requirements

For detailed implementation guidance, see Microsoft’s tutorial on implementing medallion architecture in Fabric.

To understand the foundational concepts of medallion architecture, read the comprehensive breakdown.

Leverage V-order and optimize write

Fabric provides V-order capability to write optimized Delta Lake files. Key advantages:

- Compression: Often improves compression 3-4x (up to 10x in some cases)

- Performance: Significantly faster query speeds

- Storage: Reduced storage costs through better compression

Implementation tip: Enable these features consistently across your transformation pipelines to ensure optimal performance without requiring manual optimization for each table.

# Enable V-order and optimize write in Spark

spark.conf.set("spark.sql.parquet.vorder.enabled", "true")

spark.conf.set("spark.microsoft.delta.optimizeWrite.enabled", "true")

Implement incremental loading patterns

Avoid full table reloads whenever possible. Understanding data ingestion strategies for Fabric Warehouse helps optimize your transformation workflows.

Best practices:

- Process only changed or new data

- Use watermark columns (modified date, sequence numbers)

- Load dimensions before facts to prevent referential integrity issues

- Implement proper dependency management

- Use partition pruning for large tables

Performance impact: Incremental loads can reduce processing time by 90%+ as data volumes grow.

Apply early and frequent filtering

Push filtering operations as close to data sources as possible:

- Leverage query folding in Dataflow Gen2

- Apply WHERE clauses early in Spark notebooks

- Filter at the source when possible

- Use partition pruning for partitioned tables

- Minimize data movement between stages

Establish naming conventions and documentation standards

Define and enforce standards for:

- Database and schema names (e.g.,

bronze_salesforce,silver_crm,gold_analytics) - Table naming (e.g.,

fact_orders,dim_customer) - Column naming (e.g.,

customer_id,order_date,total_amount) - Transformation object names

- Documentation practices

Critical insight: These standards become increasingly important as teams and data estates grow. Without them, knowledge becomes siloed and maintenance becomes exponentially more difficult.

Implement data quality checks

Build data quality validation into transformation pipelines:

| Check Type | Examples | When to Apply |

|---|---|---|

| Completeness | Null checks, required field validation | Bronze → Silver |

| Accuracy | Data type validation, range checks | Silver layer |

| Consistency | Referential integrity, cross-table validation | Silver → Gold |

| Timeliness | Freshness checks, latency monitoring | All layers |

| Uniqueness | Duplicate detection, primary key validation | Silver layer |

How Coalesce accelerates Microsoft Fabric data transformations

Coalesce brings transformational efficiency to Microsoft Fabric by addressing the core challenges that slow down data engineering teams. Rather than replacing Fabric, Coalesce enhances it by providing a purpose-built transformation layer that automates data pipelines and generates optimized code for Fabric, while offering a superior development experience.

Key capabilities comparison

| Capability | Native Fabric Tools | Coalesce + Fabric |

|---|---|---|

| Development Speed | Manual coding/configuration | Visual development with code generation |

| Standardization | Manual enforcement | Built-in templates and governance |

| Impact Analysis | Limited visibility | Automatic column-level lineage |

| Reusability | Copy/paste code | Pre-built packages and templates |

| Version Control | Complex custom setup | Built-in Git integration |

| Multiplatform | Fabric-specific | Works across Fabric, Snowflake, Databricks, Big Query, & Redshift |

Visual development with code generation

Coalesce provides a visual, column-aware interface for building transformation, providing the best of GUI-driven development and code-first flexibility.

Key benefits:

- Design visually: Drag-and-drop interface for transformation logic

- Column awareness: See data types, lineage, and dependencies at the column level

- Code generation: Coalesce generates performant SQL or Spark code automatically

- Full control: Override generated code when needed for special cases

Result: Combine the speed of visual development with the power and flexibility of hand-written code.

Pre-built packages and reusable templates

Through the Coalesce Marketplace, teams access pre-built packages containing common transformation patterns:

- Slowly changing dimension (SCD) Type 1 and Type 2 implementations

- Data quality validation frameworks

- Staging and landing patterns

- Incremental loading templates

- Fact and dimension table patterns

Impact: A transformation that might take days to code and test manually deploys in minutes with a proven, tested package.

Column-level lineage and impact analysis

Coalesce automatically generates comprehensive lineage documentation at the column level:

- See how each column flows from source to destination

- Understand transformations applied at each step

- Identify all downstream dependencies before making changes

- Validate impact analysis in seconds, not days

Transformation Breakthrough: Change management transforms from a weeks-long review process into a quick validation. Teams can confidently make modifications knowing exactly what will be affected.

Built-in governance and standardization

Coalesce enforces transformation standards and governance through reusable node types and templates:

- Every transformation follows organizational patterns

- Consistency regardless of who builds it or when

- Automatic documentation generation

- Security and compliance rules enforced at design time

Benefit: Governance-by-design eliminates the drift that occurs when teams implement transformations manually.

Git-based version control and CI/CD

Coalesce stores all transformation definitions in Git:

- Proper version control for all transformation logic

- Branching strategies for development workflows

- Code review processes before production deployment

- Rollback capabilities when issues arise

- Audit trail of all changes

Multiplatform flexibility

For organizations working across multiple platforms, Coalesce provides:

- Single development interface

- Build transformations once

- Deploy to Fabric, Snowflake, Databricks, Big Query, or Redshift

- Prevent vendor lock-in

- Leverage strengths of different platforms

Accelerated time to value

Organizations implementing Coalesce for Fabric transformations typically report:

- 10x faster development cycles – What previously took months now takes weeks or days

- 80% reduction in maintenance time – Standardization reduces ongoing support burden

- 50% fewer production incidents – Impact analysis prevents breaking changes

- 90% faster onboarding – New team members productive in days, not months

Explore Coalesce Customer Stories >

Real-world transformation scenarios

Understanding how these concepts apply in practice helps illuminate the value of optimized transformation approaches:

Scenario 1: Complex fact table with multiple dimensions

Business requirement: Build a sales fact table that joins data from 12 different dimension tables, applies business rules for revenue recognition, and implements slowly changing dimension handling.

Native Fabric approach:

- Write custom PySpark or T-SQL code for each join

- Implement SCD logic manually (hundreds of lines of code)

- Code business rules for revenue recognition

- Create extensive tests to validate logic

- Document dependencies and transformation logic

- Estimated time: 2-3 weeks

With Coalesce:

- Use dimension lookup node types from marketplace

- Configure SCD handling through pre-built packages

- Define business rules visually

- Automatic lineage and impact analysis

- Coalesce generates optimized code automatically

- Estimated time: 2-3 days

Result: 10x faster development with built-in best practices and governance.

Scenario 2: Data quality framework across bronze to gold layers

Business requirement: Implement consistent data quality checks throughout the medallion architecture, ensuring data meets standards before promotion to each layer.

Native Fabric approach:

- Write custom validation logic in notebooks for each table

- Maintain consistency across hundreds of tables manually

- Create separate dashboards for quality monitoring

- Implement alerting through custom code

- Update validation rules requires touching many objects

- Estimated time: 4-6 weeks

With Coalesce:

- Deploy data quality packages from marketplace

- Configure quality rules once, apply to multiple tables

- Automatic quality metric tracking

- Built-in alerting and monitoring

- Update rules centrally, apply everywhere

- Estimated time: 1 week

Benefits: Consistent quality standards, reduced maintenance, centralized monitoring.

Scenario 3: Migrating transformations from legacy system

Business requirement: Move 500+ transformation processes from an on-premises system to Fabric, preserving business logic while modernizing architecture.

Native Fabric approach:

- Manually rewrite each transformation to PySpark or T-SQL

- Translate legacy code to modern patterns

- Extensive testing to ensure logic preservation

- High risk of logic errors during translation

- Team becomes bottleneck (one engineer per ~50 transformations)

- Estimated time: 6-12 months

With Coalesce:

- Leverage Coalesce Copilot for AI-assisted workflows, migration tools and templates

- Visual interface makes legacy logic easier to understand

- Standardized approach reduces errors

- Accelerated testing through automatic lineage

- Team productivity multiplied through reusable patterns

- Estimated time: 2-3 months

Impact: Faster migration, lower risk, improved maintainability of modernized transformations.

Getting started with optimized Fabric data transformations

For teams looking to improve their Microsoft Fabric transformation workflows, several steps provide immediate value:

Assess current transformation complexity

Conduct an inventory to establish your baseline:

- How many transformation objects do you maintain?

- How long does it take to implement changes?

- Where do you spend the most time (new development vs maintenance)?

- What’s your deployment frequency?

- How many production incidents relate to transformations?

This assessment helps measure improvement and identify high-impact optimization areas.

Identify standardization opportunities

Look for repeated patterns across your transformations:

- Similar logic implemented in multiple places

- Inconsistent approaches to common problems (SCDs, incremental loads)

- Undocumented standards that aren’t followed consistently

- Copy/paste code that could be templated

These opportunities represent quick wins through reusable patterns.

Implement column-level lineage

Understanding data flow at the column level transforms impact analysis:

- See exactly which downstream objects use each column

- Identify breaking changes before deployment

- Document transformation logic automatically

- Enable confident, rapid changes

Whether through Coalesce or other lineage tools, this visibility pays immediate dividends.

Adopt version control and CI/CD

Treat transformation code like application code:

- Store all transformation logic in version control (Git)

- Implement branching strategies for development

- Require code review before production deployment

- Automate testing and validation

- Enable rapid, safe rollbacks when needed

Evaluate transformation platforms

Modern transformation platforms exist specifically to solve the challenges outlined in this guide:

- Start small: Pilot project with subset of transformations

- Measure impact: Track development speed, maintenance time, incident reduction

- Compare approaches: Native tools vs specialized platform

- Validate ROI: Quantify productivity gains and risk reduction

- Scale success: Expand to more projects after validation

The future of data transformation in Fabric

The data transformation landscape continues evolving rapidly. Several trends will shape how teams work with Fabric and other platforms:

AI-assisted development

AI copilots are beginning to help with:

- Generating transformation code from natural language

- Writing documentation automatically

- Suggesting optimizations based on patterns

- Identifying potential data quality issues

- Recommending best practices

Most effective when combined with strong patterns and frameworks.

Semantic understanding

Future transformation tools will better understand business concepts:

- Map technical columns to business terms

- Understand relationships between business entities

- Provide intelligent recommendations based on context

- Enable more automated optimization

- Bridge technical and business perspectives

Continuous optimization

Rather than static transformations:

- Adaptive systems adjust execution strategies automatically

- Performance optimization based on actual data patterns

- Resource allocation tuned to workload characteristics

- Predictive scaling for anticipated demand

Unified metadata management

Cross-platform metadata becomes increasingly critical:

- Single catalog spanning entire data ecosystem

- Consistent governance across platforms

- Unified lineage view end-to-end

- Centralized data quality monitoring

- Platform-agnostic discovery and search

Microsoft Fabric provides a powerful unified platform for data analytics, but data transformation remains complex and time-consuming when approached with native tools alone. The challenges of development velocity, technical debt, limited visibility, and scaling difficulties affect teams of all sizes.

Key takeaways:

- The “T” in ELT is still the bottleneck, even on modern platforms like Fabric

- Native tools require significant manual effort and custom code

- Standardization and governance are critical but difficult to maintain

- Column-level lineage transforms impact analysis and deployment confidence

- Modern transformation platforms like Coalesce address these challenges directly

By understanding these challenges and adopting modern best practices, data engineering teams can dramatically improve their transformation workflows. Purpose-built transformation platforms like Coalesce provide:

- Visual development that generates optimized code

- Reusable components that implement best practices

- Automatic lineage at the column level

- Built-in governance through templates and standards

- Multiplatform flexibility to avoid vendor lock-in

The result: Faster development cycles, higher quality data, reduced maintenance burden, and greater organizational agility.

Bottom Line: For data engineers and architects working with Microsoft Fabric, the question isn’t whether to optimize transformation workflows—it’s how quickly to adopt approaches that eliminate bottlenecks and unlock team productivity.

As data volumes and complexity continue growing, having the right transformation approach becomes increasingly critical for success. The organizations that thrive will be those that can transform data faster, more reliably, and more cost-effectively than their competitors.

Next Steps

Explore these resources to deepen your understanding of Microsoft Fabric and modern data transformation approaches:

- Coalesce Interactive Platform Tour: Take an virtual platform tour to see Coalesce in action

- On-Demand Demo Dive: Discover how Coalesce helps data teams transform data faster at scale, without accruing tech debt

- Coalesce Support for Microsoft Fabric – Learn how Coalesce empowers teams to build data pipelines on Fabric without technical debt

- Coalesce Transform – Explore how Coalesce delivers data transformations with built-in structure, AI capabilities, and integrated governance

- The Coalesce Platform – See how Coalesce helps teams transform and discover data 10x faster

Frequently Asked Questions About Microsoft Fabric Data Transformations

Microsoft Fabric provides several transformation options including Data Factory pipelines for orchestration, Dataflow Gen2 for low-code visual transformations, Spark notebooks for programmatic transformations using PySpark or Scala, and SQL-based transformations through T-SQL in the Fabric Warehouse.

The medallion architecture organizes data into three layers: bronze (raw data as it arrives from sources), silver (cleaned and validated data with quality checks applied), and gold (business-ready datasets optimized for analytics). This pattern provides clear separation of transformation responsibilities and makes logic easier to understand and maintain.

ETL (extract, transform, load) applies transformations before loading data into the target system, while ELT (extract, load, transform) loads raw data first and then transforms it using the platform’s compute resources. Fabric supports both approaches, with ELT generally preferred for cloud-scale data processing as it leverages Fabric’s powerful compute capabilities.

Key performance optimizations include enabling V-order and optimize write features for Delta Lake tables, implementing incremental loading instead of full refreshes, applying filters early in transformation chains, leveraging query folding in Dataflow Gen2, and using proper partitioning strategies for large datasets.

Common challenges include slow development cycles when building transformations manually, accumulation of technical debt through inconsistent code, limited visibility into transformation dependencies and impact, difficulty maintaining standards across growing teams, scalability issues as the number of transformations grows, and brittle deployment processes requiring extensive manual testing.

Coalesce accelerates Fabric transformations by providing visual, column-aware development that generates optimized code, pre-built packages for common patterns, automatic column-level lineage and impact analysis, built-in governance through reusable templates, Git-based version control, and the ability to deploy to multiple platforms including Fabric, Snowflake, and Databricks from a single interface.

Yes, Coalesce supports a multiplatform architecture enabling teams to build transformations once and deploy to Microsoft Fabric, Snowflake, Databricks, and Redshift. This flexibility helps organizations avoid vendor lock-in and leverage the specific strengths of different platforms without multiplying development effort.

Using native Fabric tools, complex transformation projects often require weeks to months depending on the number of tables and complexity of business logic. Organizations using transformation platforms like Coalesce report 10x faster development cycles, reducing projects that previously took months to weeks or even days.

Essential skills include proficiency in SQL and T-SQL for warehouse transformations, Python and PySpark for Spark-based processing, understanding of data warehousing concepts and star schema design, familiarity with ETL/ELT patterns and best practices, knowledge of Delta Lake and medallion architecture, and experience with data quality validation and testing.

Native Fabric has limited version control capabilities. Teams often implement custom solutions using Git repositories for notebooks and scripts, but maintaining consistency across Data Factory pipelines, Dataflow Gen2, and code artifacts remains challenging. Transformation platforms like Coalesce provide built-in Git integration, storing all transformation definitions in version control and enabling proper CI/CD workflows.